The best settings for Watch Dogs: Legion

The most recent Watch Dogs has an ambitious idea at its center: fill the shoes of any NPC in a sprawling, hackable open world. And like most games, that bold idea has to be supported by layers of rich tech. After spending plenty of time with the game after release, we've cracked the code on which settings you should tweak to achieve the best performance.

The Disrupt engine drives the game and makes for some impressive visuals, but the pretty scenes don’t come cheap. While I found the game passable on every GPU I tested, using a lower-end card means being okay with some compromise.

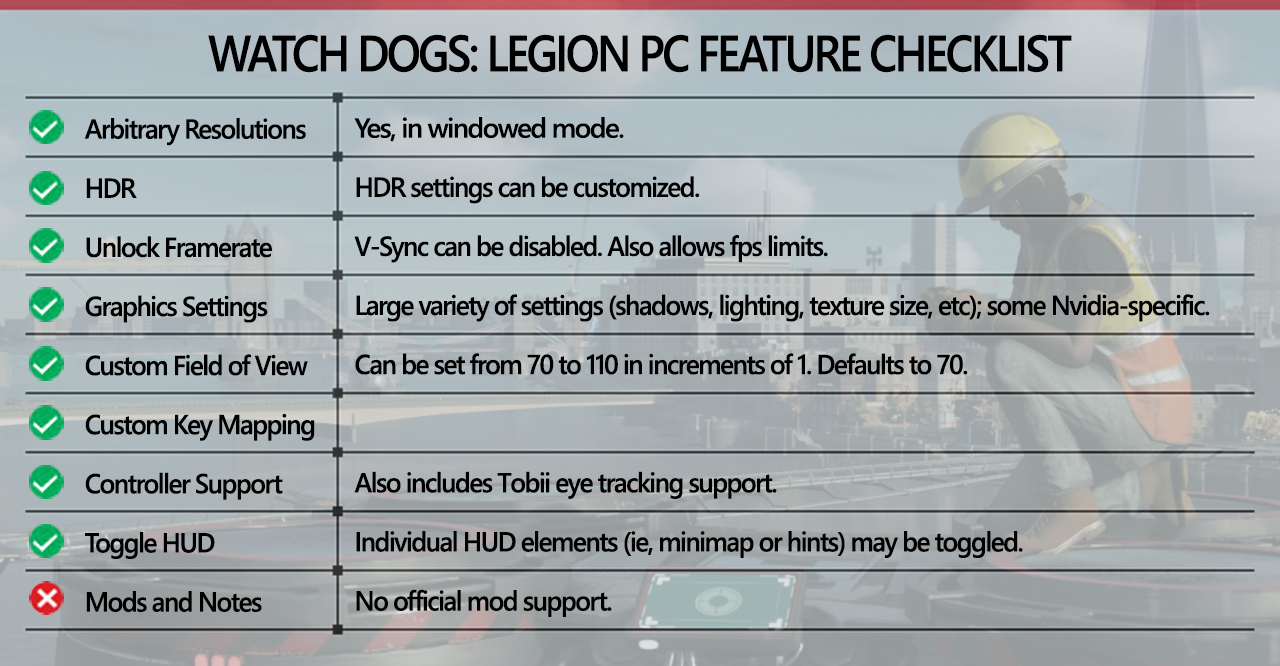

Features overview

Watch Dogs has many of the video and control settings one might expect from a similar title. The control scheme isn’t overwhelming and is, for the most part, intuitive.

As an aside, I’d like to point out that while motherboard and hardware RGB lighting can be obnoxious at times, having a way to synchronize the RGB lighting in your PC fits Watch Dogs better than most other games. The aesthetic of DedSec embraces blacks and dark grays accented with bright colors. As it so happens, that’s often the same color scheme you’ll find on many modern motherboards and cases.

Optimizing

A word on our sponsor

As our partner for these detailed performance analyses, MSI provided the hardware we needed to test Watch Dogs on different AMD and Nvidia GPUs.

A few things stood out when I was benchmarking. Like a few other games we like, Watch Dogs: Legion gives you hints about which settings will have the biggest and smallest effects on frame rate. The game can also auto-detect settings for you, which is nice even if you then customize from the detected baseline. But the big plus for me was the bar at the bottom right of the screen, which shows the available video memory and the amount the current settings are expected to need. It eliminates a lot of guesswork.

Generally, this bar is a good first guide when dialing settings in. As much as GPU cores and clocks matter, the amount of video memory can have a big impact on frame rates. VRAM is quite fast and, more importantly, sits on the graphics card itself. System memory (RAM) is tasked with holding any data that won’t fit into video memory. The problem is that the GPU cannot work on data in system RAM directly: the data first has to be transferred to video memory, and that transfer is the big performance hit from having too little VRAM. While this may not take much time in absolute terms, every millisecond matters in the rendering pipeline, and waiting for data to arrive over PCIe Gen 3 or 4 will always be slower than accessing the local memory on the graphics card.

In short, when in doubt, check that bar and keep it in the blue.
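To put rough numbers on that transfer cost, here is a minimal sketch. The PCIe figures are approximate theoretical peaks for an x16 link, and the 256 MB spill size is a hypothetical value chosen for illustration, not something measured in the game.

```python
# Approximate theoretical peak bandwidth of an x16 link, in GB/s.
# Real-world throughput is lower, so treat these as best-case figures.
PCIE_X16_GB_PER_S = {"PCIe 3.0": 15.75, "PCIe 4.0": 31.5}


def transfer_ms(size_mb: float, bandwidth_gb_per_s: float) -> float:
    """Milliseconds needed to move size_mb megabytes at the given bandwidth."""
    return size_mb / 1000 / bandwidth_gb_per_s * 1000


def frame_budget_ms(fps: float) -> float:
    """Time available to render a single frame at the target frame rate."""
    return 1000 / fps


if __name__ == "__main__":
    spill_mb = 256  # hypothetical amount of data that did not fit in VRAM
    budget = frame_budget_ms(60)
    for link, bandwidth in PCIE_X16_GB_PER_S.items():
        cost = transfer_ms(spill_mb, bandwidth)
        share = cost / budget
        print(f"{link}: {cost:.1f} ms for {spill_mb} MB ({share:.0%} of a 60 fps frame)")
```

Even at the theoretical peak, moving 256 MB over PCIe 3.0 takes roughly 16 ms, which is close to the entire frame budget at 60 fps.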

The settings UI also estimates the load on both the CPU and GPU with the current configuration. While it doesn’t give a numeric readout like the video memory bar, keeping the stress on the GPU low means it will render each frame a little faster. Considering most settings don’t have a numeric value, relying instead on one of “Off,” “Low,” “Medium,” “High,” “Very High,” or “Ultra,” it can be yet another way to estimate performance.

Tweak these settings

Resolution and Render Scaling

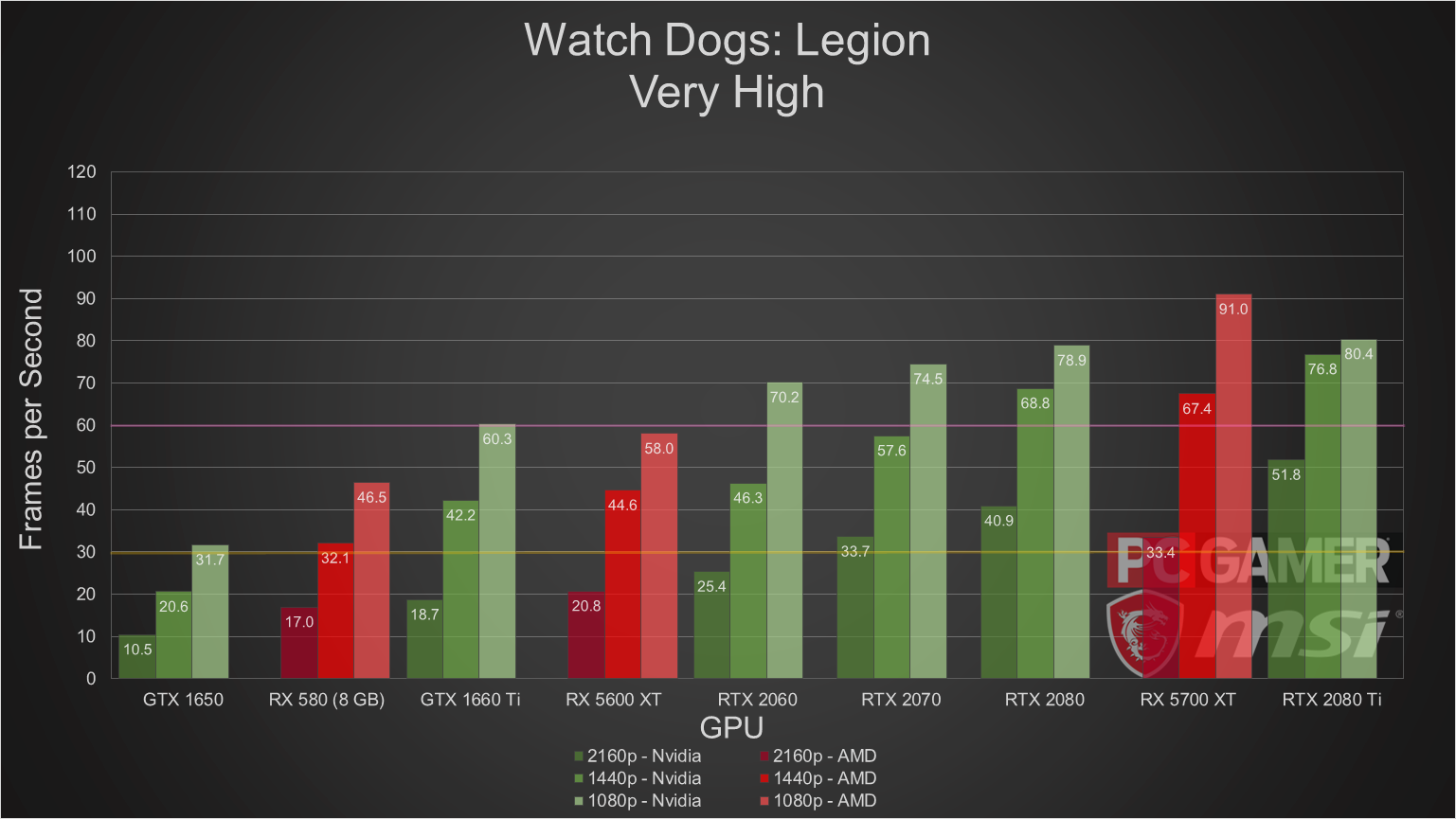

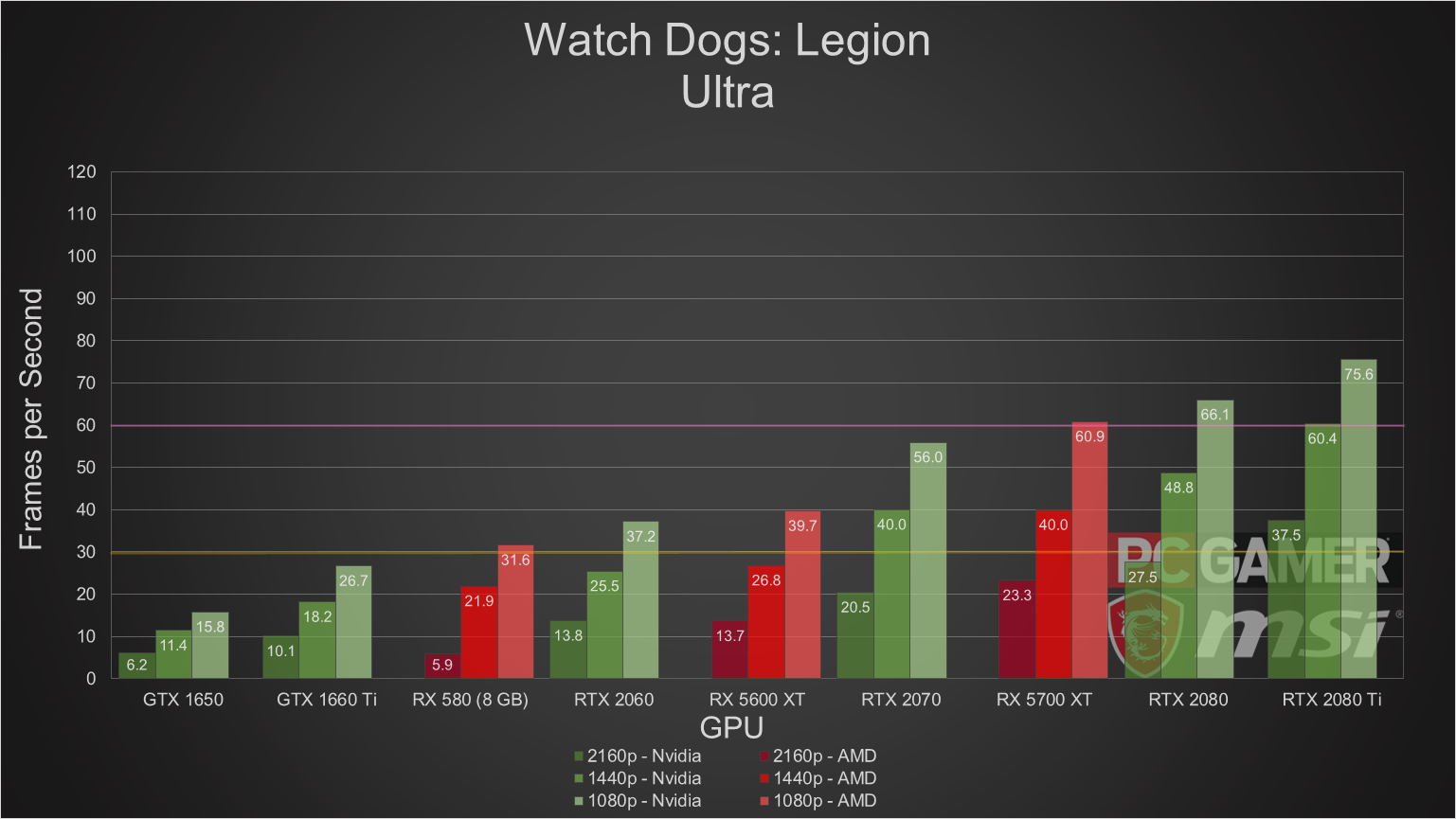

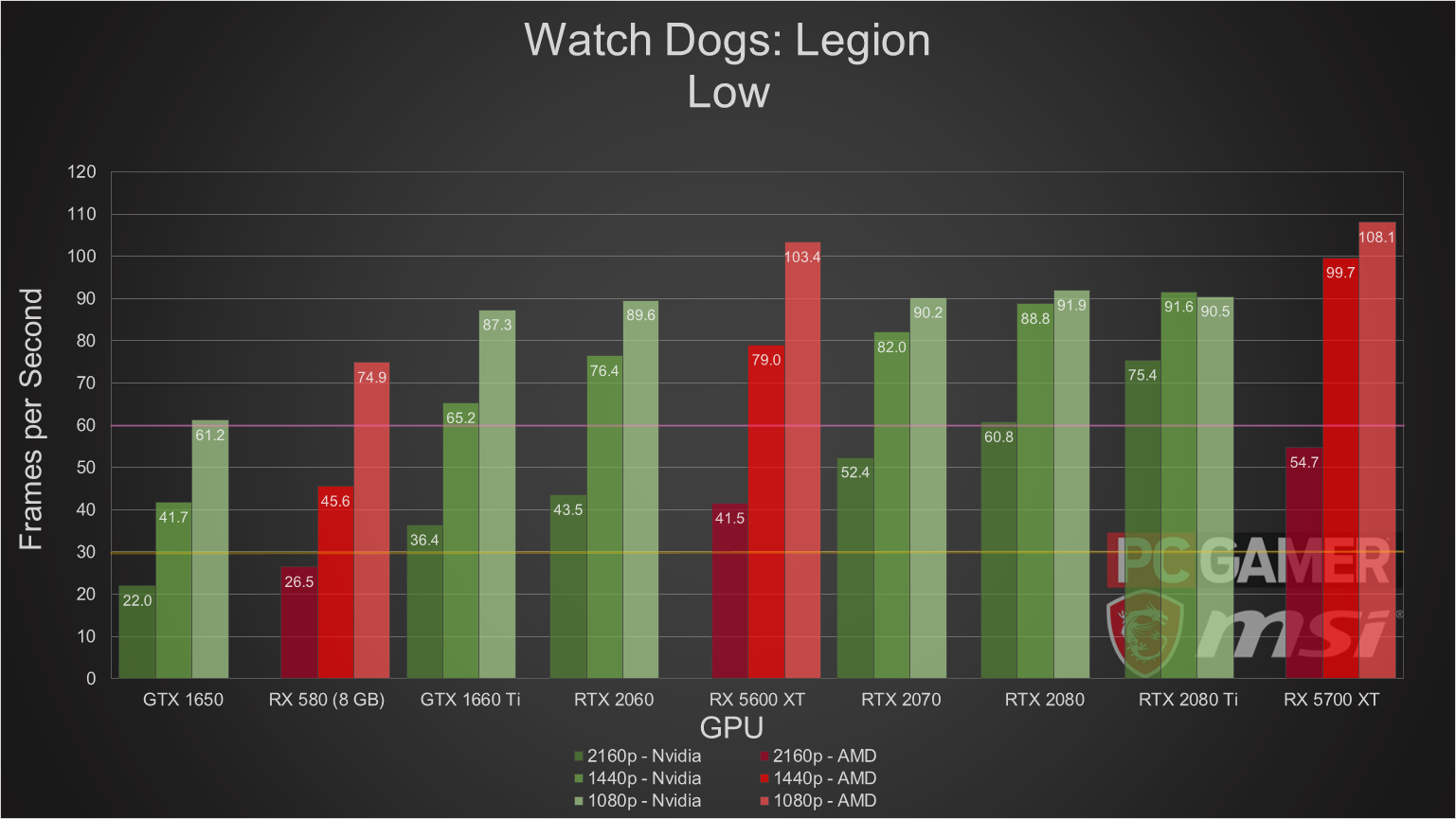

As in Microsoft Flight Simulator, one of the biggest determinants of performance is resolution. As fast as GPUs are at parallel computation, the fact remains that if the number of pixels to be rendered outnumbers the cores available to the rendering pipeline, each core will have to make multiple passes to finish the frame. If you have a lower-end GPU like a GTX 1650, don’t expect to have anything near a playable experience at 4K. That said, even the lowly GTX 1650 can (just barely) break 30 fps in the benchmark on Very High at 1080p.
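As a quick illustration of how fast the pixel count grows, the sketch below compares the three resolutions tested in this article. Pixel count is only a rough proxy for GPU load, so the ratios are a guide rather than a prediction of frame rates.

```python
# The three resolutions used in this article's benchmarks.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "2160p": (3840, 2160),
}


def pixel_count(width: int, height: int) -> int:
    """Number of pixels the GPU has to shade for one frame."""
    return width * height


def relative_load(name: str, baseline: str = "1080p") -> float:
    """Pixel count of one resolution as a multiple of the baseline."""
    return pixel_count(*RESOLUTIONS[name]) / pixel_count(*RESOLUTIONS[baseline])


if __name__ == "__main__":
    for name, (width, height) in RESOLUTIONS.items():
        pixels = pixel_count(width, height)
        print(f"{name}: {pixels:,} pixels, {relative_load(name):.2f}x 1080p")
```

Moving from 1080p to 2160p quadruples the number of pixels per frame, which is why a card that is comfortable at 1080p can fall apart at 4K.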

The other setting, temporal render scaling, also affects the rendered resolution. By default, this is set to 100 for all setting presets, with 100 representing the set resolution (e.g. 1920x1080). However, if you drop it by moving the slider left, you’ll notice that the UI tells you the effective resolution of each frame before it is upscaled and anti-aliased. This can be handy if you’re looking to get extra fps, and works especially well if you’re trying to play at 1440p or 2160p. However, since this is effectively digital zoom at work, the setting has diminishing visual returns. I don’t recommend dropping this setting below 80, as the image starts becoming blurry past that point. You can set this value in increments of 5, so experimenting with it will offer some performance headroom if you really need it.
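The effective resolution the UI reports can be approximated with a few lines of arithmetic. This sketch assumes the slider percentage is applied to each axis, which is how render scale sliders typically work; the game’s exact rounding is not documented here, so treat the output as an estimate.

```python
def effective_resolution(width: int, height: int, scale_percent: int) -> tuple[int, int]:
    """Estimated render resolution before upscaling (assumes per-axis scaling)."""
    factor = scale_percent / 100
    return round(width * factor), round(height * factor)


def pixel_fraction(scale_percent: int) -> float:
    """Share of the native pixel count that actually gets rendered."""
    return (scale_percent / 100) ** 2


if __name__ == "__main__":
    # Walk the slider down in its increments of 5 at 1440p.
    for scale in range(100, 75, -5):
        width, height = effective_resolution(2560, 1440, scale)
        print(f"{scale}: {width}x{height}, {pixel_fraction(scale):.0%} of native pixels")
```

Under that assumption, a setting of 80 renders only about 64 percent of the native pixels, which is where the extra frames come from.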

Shadows

The game has a lot of dynamic lighting and dark spaces, so shadows will be cast everywhere. Calculating how shadows are projected can be quite expensive. In fact, the shadows setting is one of the most expensive settings in computational terms. Lower settings will result in sharper shadows that look more exact. On the flip side, higher quality shadows reproduce the more lifelike light scattering and diffusion that real shadows tend to have. The reference image in the settings is the shadows cast by a tree’s leaves. Because Watch Dogs takes place in the bustling city of London, there are plenty of shadows to be cast by people, vehicles, trees, and buildings. And that’s just on the streets.

Texture Quality

If you’re looking to bring down the video memory requirements to a more manageable level, texture quality should be your first stop. Because textures are generally just images wrapped around the in-game objects and models, this results in hundreds or thousands of small images being kept in video memory. Now think about the image size for each texture. Texture quality is, essentially, the image size used for each texture and can be seen as a multiplier for every texture used in rendering a scene.

Due to the nature of the setting, dropping the texture quality by even one notch brings big savings in VRAM usage. As long as you can keep this setting at Medium or higher, the game will still look good. If you have the VRAM to spare, having textures at Very High or Ultra will make the game look great.
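A rough calculation shows why one notch makes such a difference. The sketch below assumes square, uncompressed 4-byte-per-texel textures with a full mipmap chain; the game’s actual texture formats and sizes are not known here, and real games usually use compressed formats, so only the ratios are meaningful.

```python
def texture_bytes(size: int, bytes_per_texel: int = 4, mipmaps: bool = True) -> int:
    """Memory for one square texture, optionally including its mipmap chain."""
    total = 0
    while True:
        total += size * size * bytes_per_texel
        if not mipmaps or size == 1:
            break
        size //= 2  # each mip level halves both dimensions
    return total


if __name__ == "__main__":
    for size in (4096, 2048, 1024):
        megabytes = texture_bytes(size) / 2**20
        print(f"{size}x{size}: {megabytes:.1f} MiB per texture")
```

Halving the width and height of a texture cuts its memory to a quarter, and that saving is repeated across every texture in the scene.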

Ray Tracing

Watch Dogs allows you to enable ray-traced reflections if you like. In short, ray-traced reflections will make puddles reflect nearby objects and shiny surfaces look more realistic. The effect looks great, but it is also computationally expensive. I wouldn’t recommend it without a GPU with dedicated ray-tracing hardware. Doing these computations without dedicated hardware will result in a drop in framerate that is noticeable at best and unplayable at worst. Currently, only Nvidia’s RTX 20 and RTX 30 lines and AMD’s RX 6000 series have dedicated hardware for ray-tracing computation.

If you choose to enable ray tracing without a GPU with hardware support, you will have to make sacrifices elsewhere in order to keep the game playable. And there’s a very good chance you won’t be happy with the trade.

DLSS (Nvidia only)

Deep learning super-sampling (DLSS) is available for Nvidia RTX GPUs. This feature renders each frame at a lower internal resolution and then uses a machine learning model to upscale it, instead of rendering every pixel at full resolution “the hard way” each frame. The end result is higher frame rates than you otherwise might get, with image quality that stays close to native.

This setting has five options: Off, Quality, Balanced, Performance, and Ultra-Performance. For Nvidia 2080-series GPUs, I’d recommend Quality or Balanced. For the 2070-series GPUs, Balanced would be a good fit. Performance would work well for cards belonging to the 2060-series or lower. If you’re unsure, remember that the loads from video settings are cumulative, so don’t be afraid to run the benchmark with each setting.
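To get a feel for what each mode costs in rendered pixels, the sketch below uses the per-axis scale factors commonly cited for DLSS 2 modes. These factors are an assumption taken from general Nvidia guidance, not values read from Watch Dogs: Legion, and the game may round differently.

```python
# Commonly cited per-axis render scale for each DLSS 2 mode (assumed, not measured).
DLSS_SCALE = {
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 0.5,
    "Ultra-Performance": 1 / 3,
}


def internal_resolution(width: int, height: int, mode: str) -> tuple[int, int]:
    """Estimated resolution rendered before DLSS upscales to the output size."""
    factor = DLSS_SCALE[mode]
    return round(width * factor), round(height * factor)


if __name__ == "__main__":
    for mode in DLSS_SCALE:
        width, height = internal_resolution(3840, 2160, mode)
        print(f"{mode}: renders about {width}x{height} for a 4K output")
```

With these factors, Performance mode at 4K renders roughly a 1080p image, which helps explain why the lower-tier RTX cards benefit most from it.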

Our benchmarks

Helpfully, Watch Dogs has a built-in benchmark you can use for testing. When the benchmark is complete, the game even gives you a detailed readout of frames per second and frame times (the time it takes for each frame to finish all the steps in the render pipeline). However, for these measurements, I recorded the performance data with CapFrameX, an open-source performance monitor. While Watch Dogs allows you to save benchmarks, the output is an HTML page, which is fine for one card. For testing multiple cards, it was far preferable to use CapFrameX, as it records more in-depth data and exports easily to CSV.
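For readers who want to turn a list of frame times into the usual headline numbers, here is a minimal sketch. It works on a plain list of frame times in milliseconds rather than any specific CapFrameX export layout, and the sample values are made up for illustration.

```python
import math


def average_fps(frame_times_ms: list[float]) -> float:
    """Average frame rate: frames rendered divided by total elapsed time."""
    return 1000 * len(frame_times_ms) / sum(frame_times_ms)


def percentile_frame_time(frame_times_ms: list[float], pct: float) -> float:
    """Frame time at the given percentile, using the nearest-rank method."""
    ordered = sorted(frame_times_ms)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


if __name__ == "__main__":
    # Hypothetical capture: mostly smooth frames with a handful of stutters.
    sample = [16.0] * 95 + [40.0] * 5
    slow = percentile_frame_time(sample, 99)
    print(f"average: {average_fps(sample):.1f} fps")
    print(f"99th percentile frame time: {slow:.1f} ms ({1000 / slow:.1f} fps)")
```

Dividing the frame count by total time, rather than averaging per-frame fps values, keeps a few very fast frames from inflating the result. The percentile figure is one common way to express stutter, though tools differ in how they define their "1% low" numbers.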

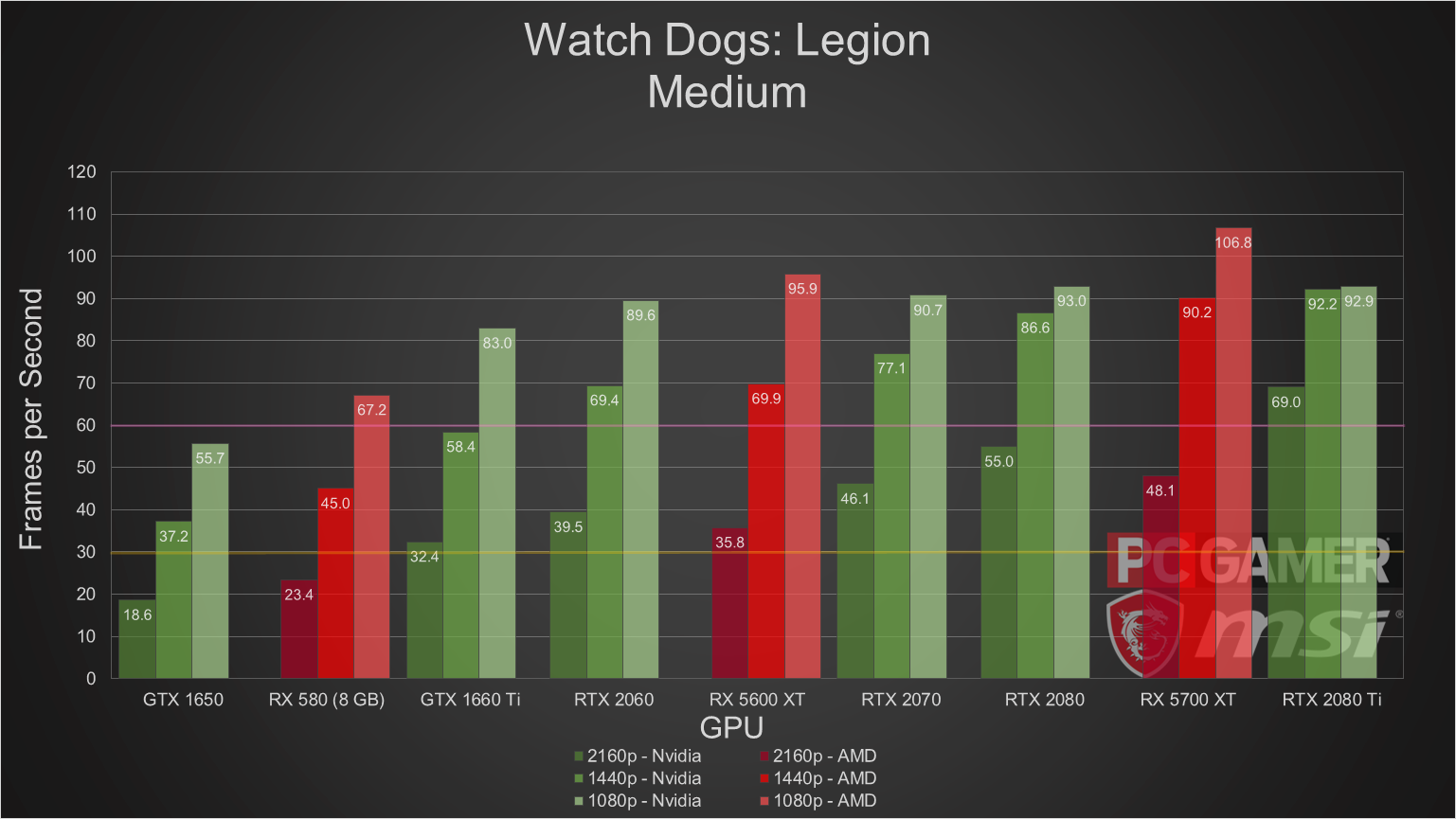

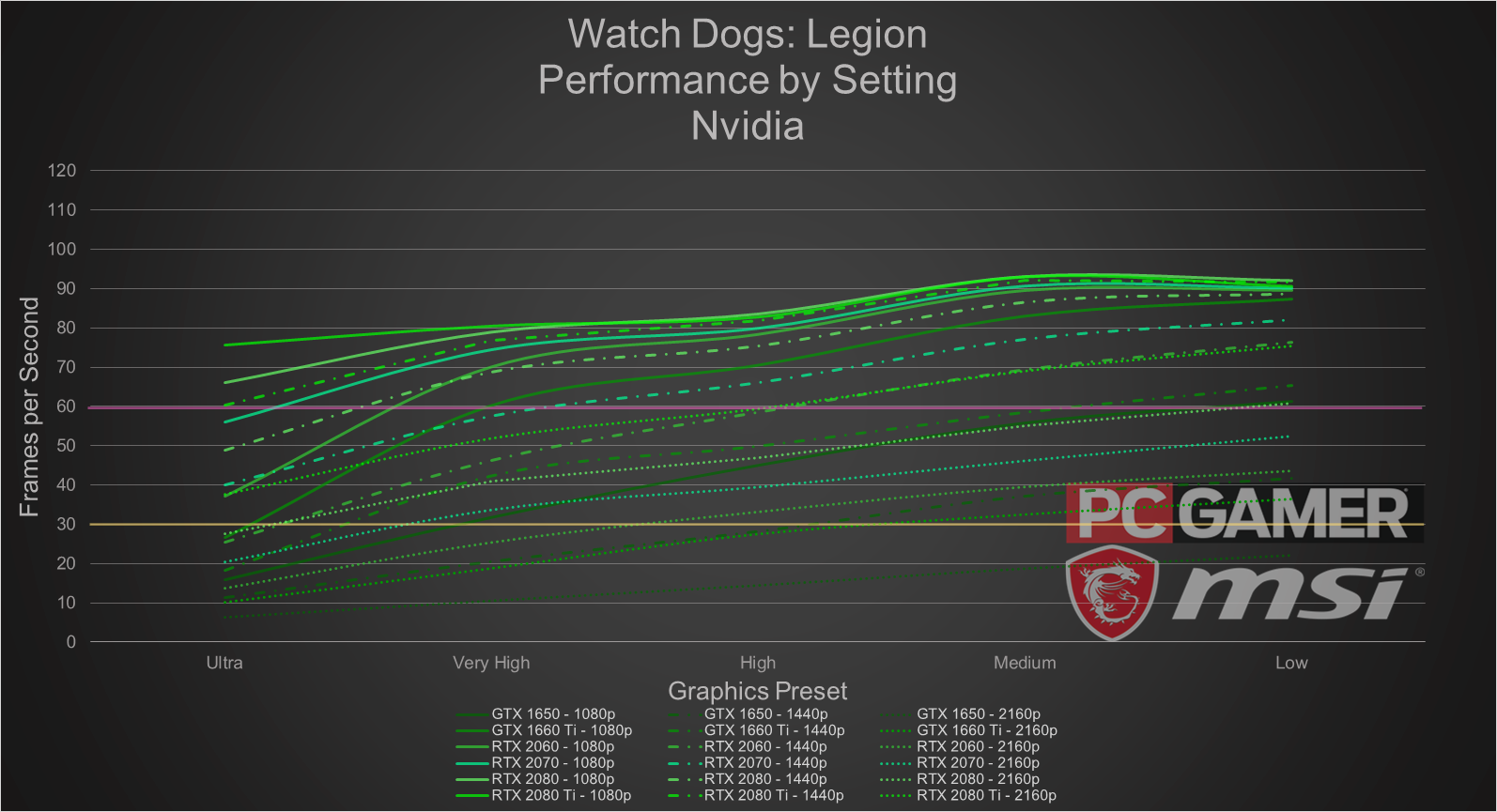

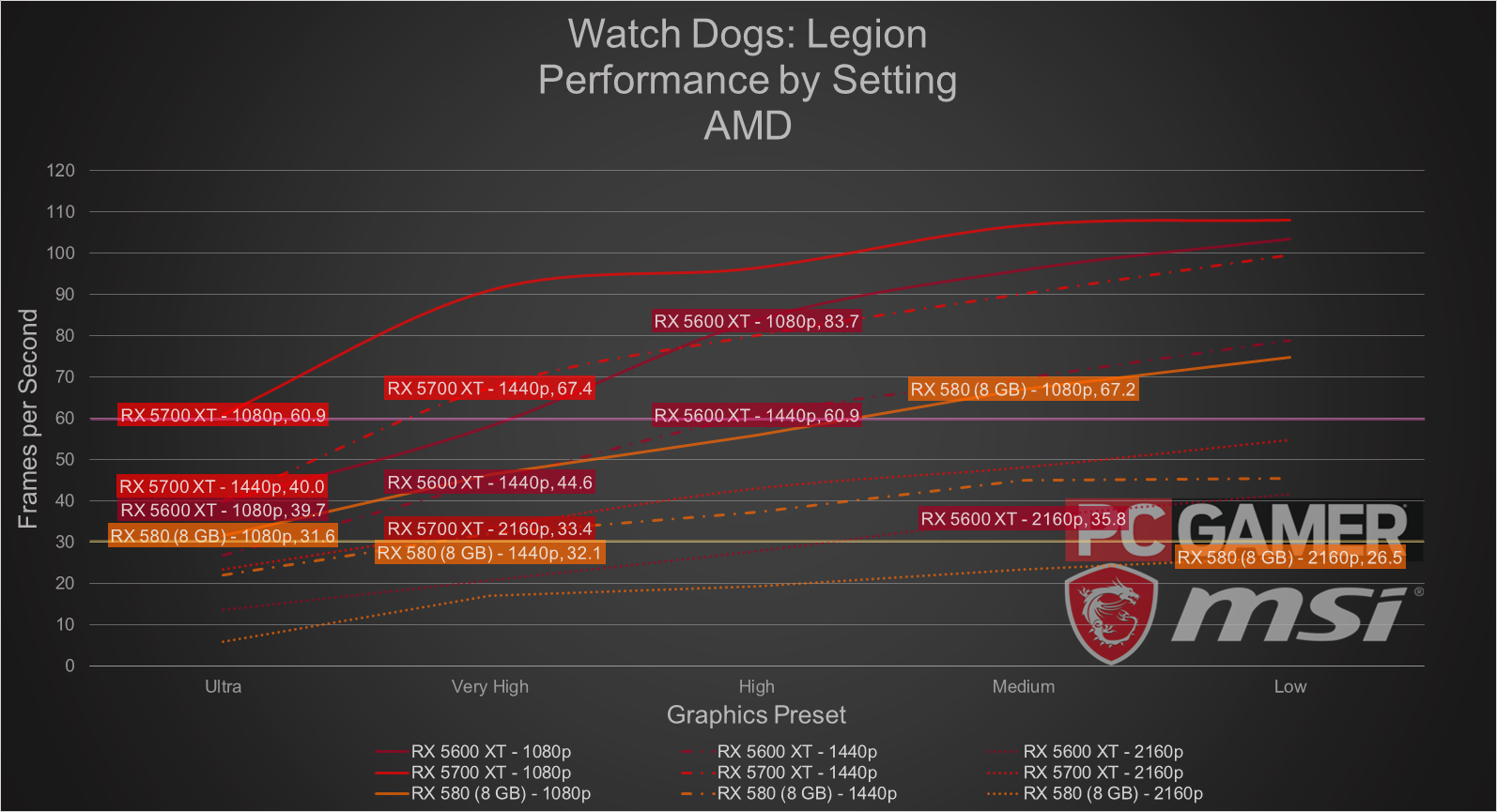

For each video card, I ran Watch Dogs at 1080p, 1440p, and 2160p at each of the quality presets, as detailed below. All the tests were run with DirectX 12 mode in fullscreen.

For almost every GPU tested, Watch Dogs was able to break 30 fps at reasonably high settings, so long as an appropriate resolution is chosen. Surprisingly, the RX 5700 XT outperformed the RTX 2080 at 1080p, though this was without using DLSS, which hands Nvidia GPUs a solid advantage.

None of the GPUs were able to reach the 144 fps mark, the ceiling for many high-end gaming monitors. However, many of the cards were able to surpass or get reasonably close to the 60 fps mark. The RTX 2080 Ti at 4K with Very High settings was able to maintain a solid 60 fps when DLSS was enabled. Without DLSS, the 2080 Ti topped out at just under 52 fps.
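The DLSS gain on the 2080 Ti can be expressed as a percentage and as frame time saved. The sketch below rounds the two figures quoted above to 52 and 60 fps, so the results are approximate.

```python
def frame_time_ms(fps: float) -> float:
    """Average time spent on one frame at the given frame rate."""
    return 1000 / fps


def uplift_percent(before_fps: float, after_fps: float) -> float:
    """Relative frame rate gain, as a percentage of the starting value."""
    return (after_fps - before_fps) / before_fps * 100


if __name__ == "__main__":
    without_dlss, with_dlss = 52.0, 60.0  # rounded from the figures quoted above
    saved = frame_time_ms(without_dlss) - frame_time_ms(with_dlss)
    gain = uplift_percent(without_dlss, with_dlss)
    print(f"uplift: {gain:.1f}%")
    print(f"frame time saved: {saved:.2f} ms per frame")
```

That works out to a gain of roughly 15 percent, or about 2.5 ms shaved off each frame.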

Looking at the data, one thing becomes abundantly clear: Every one of these cards can deliver 30 or more frames per second in Watch Dogs with settings of Very High or better at 1080p. But, predictably, the ability to keep frame rates high begins falling off as you move up the resolution scale.

The hardware we recommend

Watch Dogs: Legion is not a game for testing out native 4K on a budget PC. In our benchmarks, even the RTX 2080 Ti needed DLSS to hold 60 fps at 4K on Very High, and none of the cards tested reached 144 fps. If you’re on a card like the GTX 1650, 1080p is the realistic target.

Looking for a 4K monitor? PC Gamer's recommendation right now is the Acer Predator XB273K, which offers 4K/144 Hz/HDR gaming at a relatively affordable price. I use one for these benchmarking tests actually.

Laptop: And last but not least, our laptop recommendation. I recommend MSI's GE66 Raider line. It's relatively inexpensive, and even the low-end options ship with 144Hz panels. (You can also upgrade to 240Hz and 300Hz, if you'd like.)

Conclusion

Watch Dogs: Legion, while demanding at the higher resolutions, offers a great visual experience even on modest GPUs. While Nvidia enjoys a clear advantage with DLSS, Team Red isn’t far behind when it comes to the raw, preset settings. It’s actually a welcome outcome given that the game opens up with a quick flash of the Nvidia RTX branding logo.

If you’ve got an RTX video card, you won’t really have much of a problem with framerates, especially if you choose to turn on DLSS. Turning on V-Sync with a G-Sync or FreeSync monitor will also help smooth things out, as all the cards tested here can handle a steady framerate well above 30 fps, even if only a few can break 60.

One final thing: Watch Dogs: Legion is on sale through Ubisoft Connect on Black Friday. London’s calling, and DedSec is answering.

Other hardware to consider

Desktops, motherboards, notebooks

MSI MEG Z390 Godlike

MSI MEG X570 Godlike

MSI Trident X 9SD-021US

MSI GE75 Raider 85G

MSI GS75 Stealth 203

MSI GL63 8SE-209

Nvidia GPUs

MSI RTX 2080 Ti Duke 11G OC

MSI RTX 2080 Super Gaming X Trio

MSI RTX 2080 Duke 8G OC

MSI RTX 2070 Super Gaming X Trio

MSI RTX 2070 Gaming Z 8G

MSI RTX 2060 Super Gaming X

MSI RTX 2060 Gaming Z 8G

MSI GTX 1660 Ti Gaming X 6G

MSI GTX 1660 Gaming X 6G

MSI GTX 1650 Gaming X 4G

AMD GPUs

MSI Radeon RX 5700 XT

MSI Radeon RX 5700

MSI RX Vega 56 Air Boost 8G

MSI RX 590 Armor 8G OC

MSI RX 570 Gaming X 4G

Our testing regimen

Best Settings is our guide to getting the best experience out of popular, hardware-demanding games. Our objective is to provide the most accurate advice that will benefit the most people. To do that, we focus on testing primarily at 1080p, the resolution that the vast majority of PC gamers play at. We test a set of representative GPUs that cover the high, mid, and budget ranges, plugging these GPUs into a test bed that includes an Intel Core i7-8700K with an NZXT Kraken cooler, 16GB of RAM, and a 500GB Samsung 960 EVO M.2 SSD. We used the latest Nvidia and AMD drivers at the time of writing (446.14 and 20.4.2 respectively).
