First person shooters have been getting perspective wrong all along

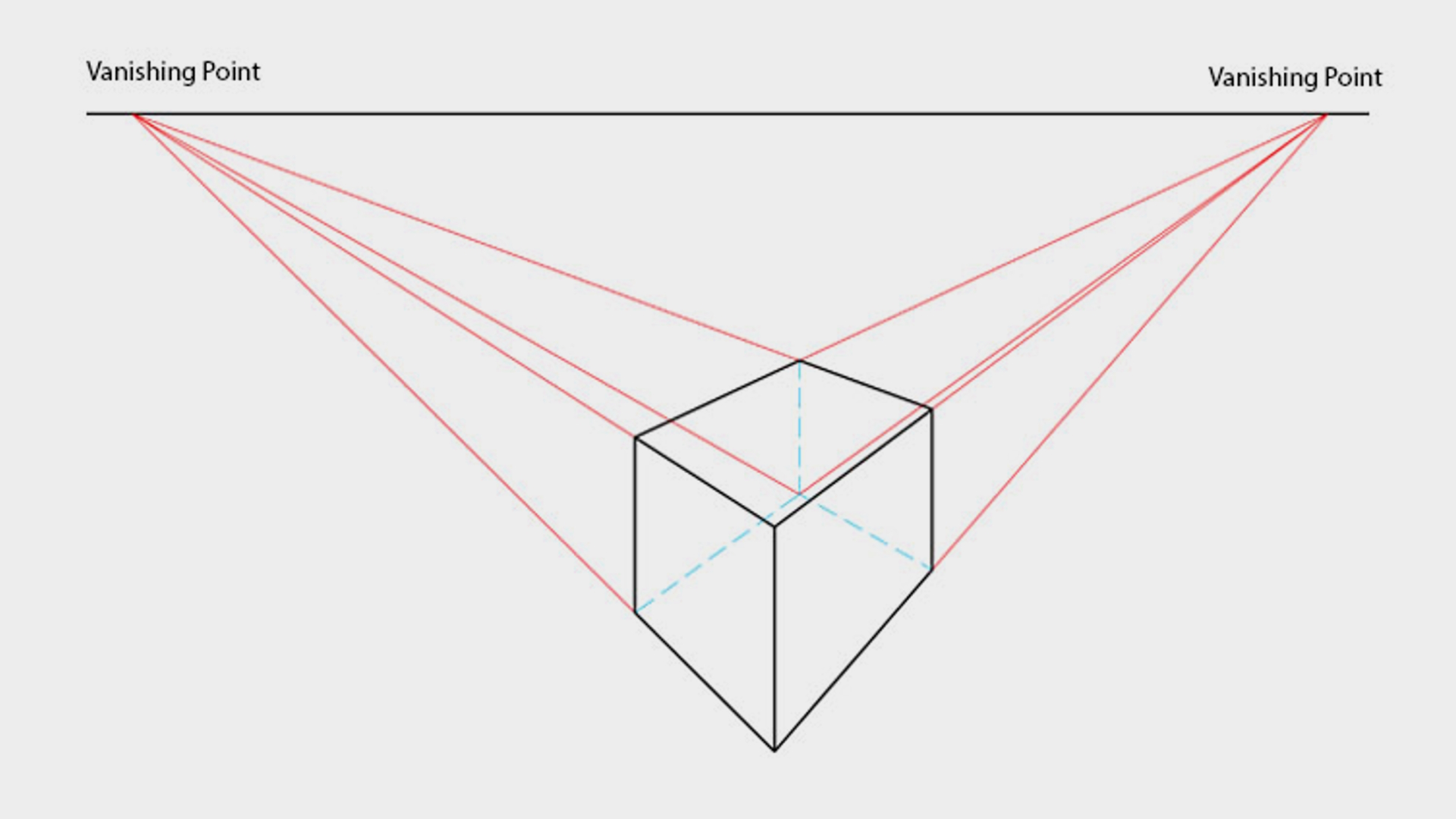

Linear perspective is the standard method of representing 3D objects on a 2D plane, which is exactly what happens when a game shows up on your gaming monitor. It encompasses the one-, two-, and three-point perspectives you probably learned about in school, and has been the dominant perspectival schema in art since its conception, way back in the fifteenth century.

Now, Robert Pepperell of Cardiff Metropolitan University in Wales (via New Scientist) is making us question everything we understand about how perspective should be represented in video games.

The current standard of linear perspective was developed by architect, poet, and humanist Leon Battista Alberti. He's widely considered the father of the Early Renaissance in the art world, and popularised the linear perspective techniques that architects and artists (game artists included) still use today when trying to capture accuracy and realism in a scene.

If you're unfamiliar, it's a technique that gives the illusion of depth and volume: you draw parallel lines back from each corner of a 2D object so that they merge at certain points (the vanishing points). There's one vanishing point in one-point perspective, two in two-point perspective, and you get the idea.
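To see the vanishing point idea in numbers, here is a minimal sketch of a pinhole-style linear projection. It is an illustration only, not anything taken from the research: the function names, the focal length of 1.0, and the two "rails" are hypothetical choices made for this example. It projects points along two parallel lines that recede from the viewer and shows their screen positions closing in on the same spot.

```python
def project(point, focal_length=1.0):
    """Linear (pinhole) projection of a 3D point onto a 2D image plane.

    Assumes the viewer sits at the origin looking down the +z axis.
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the viewer (z > 0)")
    return (focal_length * x / z, focal_length * y / z)


def point_on_line(start, direction, t):
    """Return the point reached by travelling t units along a 3D line."""
    return tuple(s + t * d for s, d in zip(start, direction))


def vanishing_point(direction, focal_length=1.0):
    """Screen position that every line with this direction converges on.

    Returns None when the line runs parallel to the image plane, because
    such lines never converge on screen.
    """
    dx, dy, dz = direction
    if dz == 0:
        return None
    return (focal_length * dx / dz, focal_length * dy / dz)


# Two hypothetical rails running straight away from the viewer.
direction = (0.0, 0.0, 1.0)
left_rail = (-1.0, -1.0, 1.0)
right_rail = (1.0, -1.0, 1.0)

for t in (0.0, 10.0, 1000.0):
    left = project(point_on_line(left_rail, direction, t))
    right = project(point_on_line(right_rail, direction, t))
    print(f"distance {t:>6}: left rail {left}, right rail {right}")

print("vanishing point:", vanishing_point(direction))
```

The rails start two units apart on screen and end up almost on top of each other at the centre of the image, which is the single vanishing point of a one-point perspective drawing.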

The alternative is non-linear perspective, which relies on atmospheric cues to relay depth, volume and position, such as colour value and different levels of detail depending on how far away an object is. This is something you'll see more in expressionist artwork, which is driven more by the importance of a subject than by anatomical correctness.

Non-linear perspectives aren't a new thing for video games, either. Your first exposure to non-linear graphical projections might have been the curvilinear perspective, that time you discovered the freaky fish-eye cheat in The Sims. Plenty of games use stylised perspectives too, which warp the traditional linear perspectives to make things look ultra trippy because why the heck not. It's a game, why should it look realistic?

Pepperell and co. at Cardiff Metropolitan University have found that a non-linear perspective may be the way to go when it comes to games. More specifically, they have used a mathematical model to develop new software that can adjust a game's perspective to mimic how the brain really sees a scene.

What that comes down to is making a scene more attuned to the way our brain perceives light hitting our curved retinae, something that a linear perspective can't quite capture.

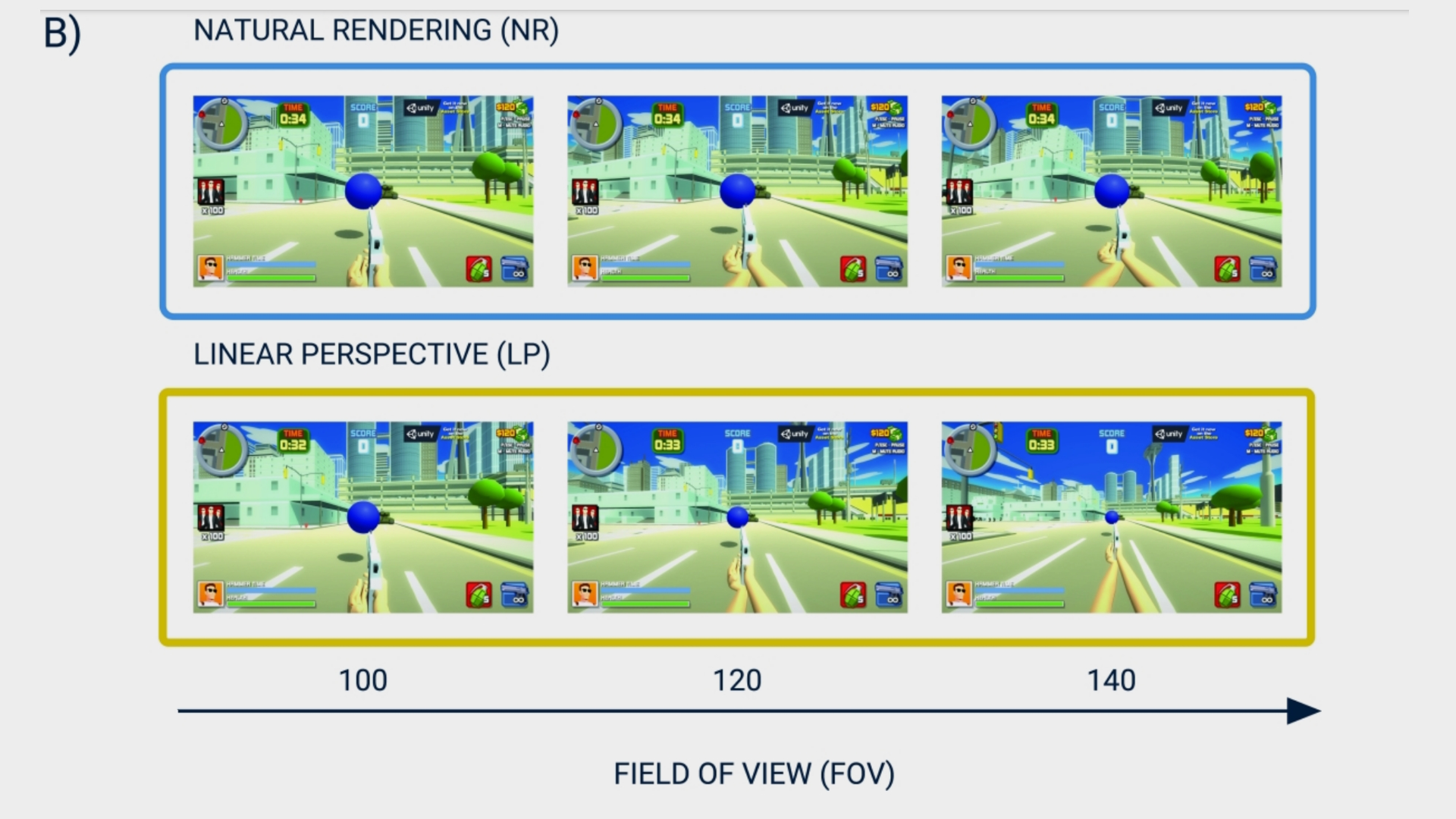

Taking screenshots from action game Hammer 2, Pepperell's crew presented just under 200 participants with two different perspectives (the original, and the more natural one modded in by their software) and asked them to guess the distance of a blue ball in a scene.

Participants were tested across a range of formats and fields of view, giving a multiple-choice answer for each of 72 different images.

The research showed that people tend to overestimate distance with the standard linear perspective, whereas the natural perspective made it slightly easier to determine the distance, especially at wider fields of view.
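For a rough feel of why wide fields of view are hard on linear perspective, here is a small sketch comparing it with a simple curvilinear (equidistant fisheye) mapping. To be clear, the fisheye formula is a stand-in chosen for illustration and is not the model behind the researchers' software; the function names and focal length are likewise assumptions made for this example. Each function gives how far from the screen centre an object lands when it sits a given angle off the view axis.

```python
import math


def rectilinear_radius(angle_deg, focal_length=1.0):
    """Distance from screen centre under linear perspective: f * tan(angle)."""
    if not 0 <= angle_deg < 90:
        raise ValueError("angle must be in [0, 90) degrees")
    return focal_length * math.tan(math.radians(angle_deg))


def equidistant_radius(angle_deg, focal_length=1.0):
    """Distance from screen centre under an equidistant fisheye: f * angle."""
    return focal_length * math.radians(angle_deg)


def rectilinear_stretch(angle_deg):
    """Radial stretch of linear perspective relative to the screen centre.

    This is the derivative of tan(angle), i.e. 1 / cos(angle)^2.
    """
    return 1.0 / math.cos(math.radians(angle_deg)) ** 2


# Half-angles of 30, 45 and 60 degrees match total FOVs of 60, 90 and 120.
for half_fov in (30, 45, 60):
    print(
        f"FOV {2 * half_fov:>3} deg: "
        f"linear edge at {rectilinear_radius(half_fov):.3f}, "
        f"fisheye edge at {equidistant_radius(half_fov):.3f}, "
        f"linear edge stretch x{rectilinear_stretch(half_fov):.2f}"
    )
```

At a 120 degree field of view, linear perspective stretches objects at the edge of the screen to four times their radial size at the centre, while the fisheye mapping keeps the spacing even and bends straight lines instead. That trade-off is the kind of thing any alternative projection has to balance.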

Pepperell's research has culminated in a startup company, FovoTec. Its front page claims the software can deliver "a far greater field of view and a more accurate sense of depth, space, and movement."

So if you've been trying to use a wider field of view in games with your super curvy wide angle monitor, and have been having trouble with the perspective, then Pepperell may just have found one impressive solution. It should also make for a more realistic, immersive take on the first-person gaming perspective.

The research lines up with Chiara Saracini's work at the Catholic University of Maule, which says computer models have been totally messing up our perception of distance. She has her reservations about whether this is the definitive answer to our FOV woes, though, since there was still a fair deal of overestimation happening. Still, things can only get better from here.
